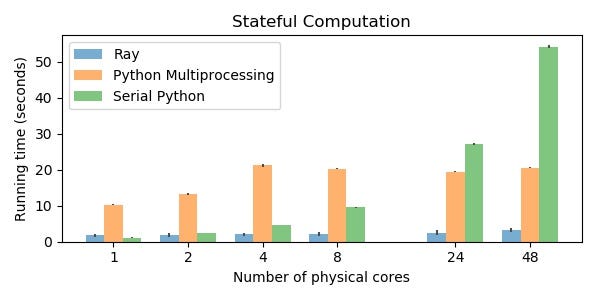

10x Faster Parallel Python Without Python Multiprocessing | by Robert Nishihara | Towards Data Science

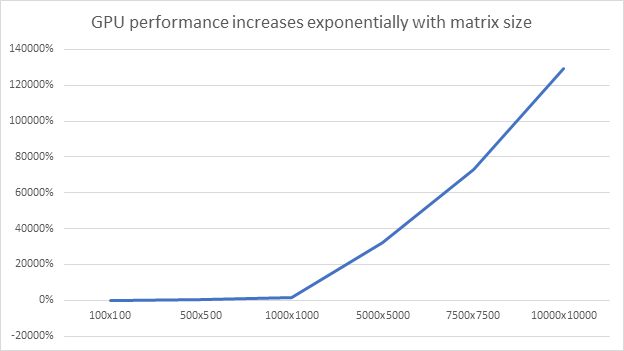

Accelerating Sequential Python User-Defined Functions with RAPIDS on GPUs for 100X Speedups | NVIDIA Technical Blog

Why is the Python code not implementing on GPU? Tensorflow-gpu, CUDA, CUDANN installed - Stack Overflow

GPU parallel computing for machine learning in Python: how to build a parallel computer, Takefuji, Yoshiyasu, eBook - Amazon.com

Productive and Efficient Data Science with Python: With Modularizing, Memory Profiles, and Parallel/Gpu Processing (Paperback) | Hooked

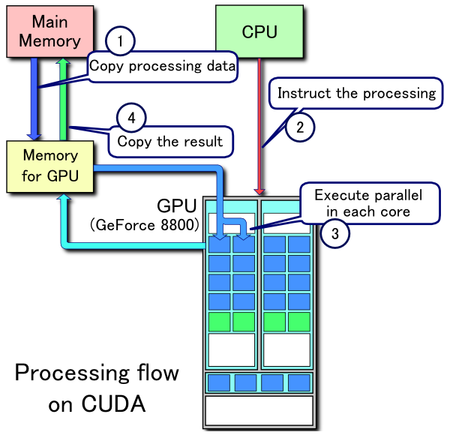

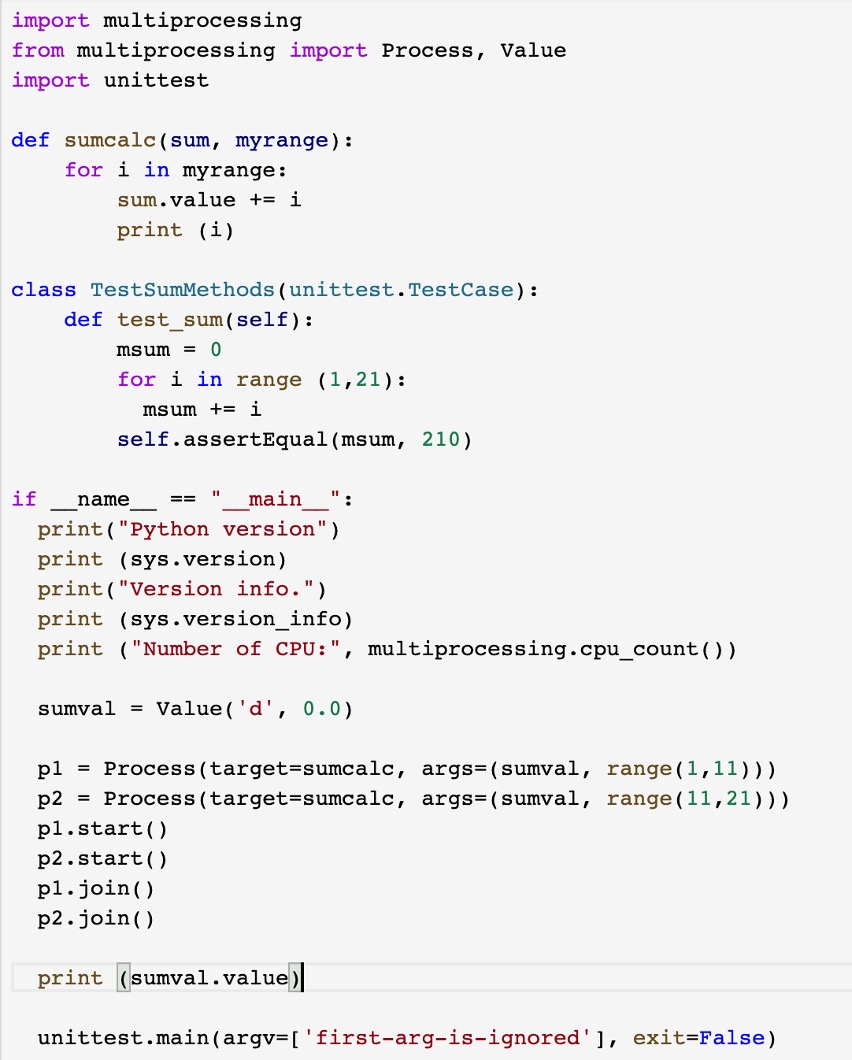

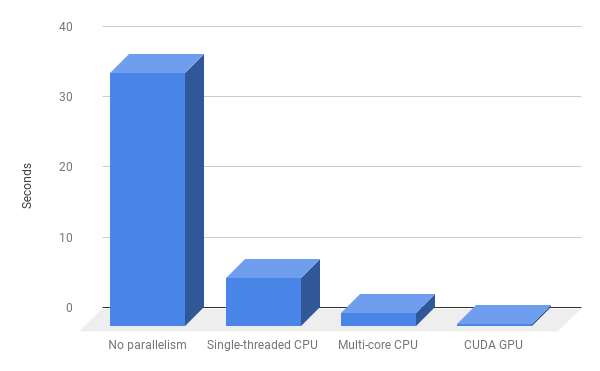

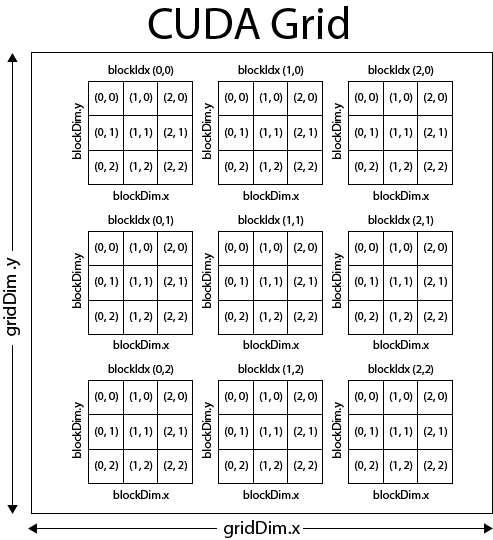

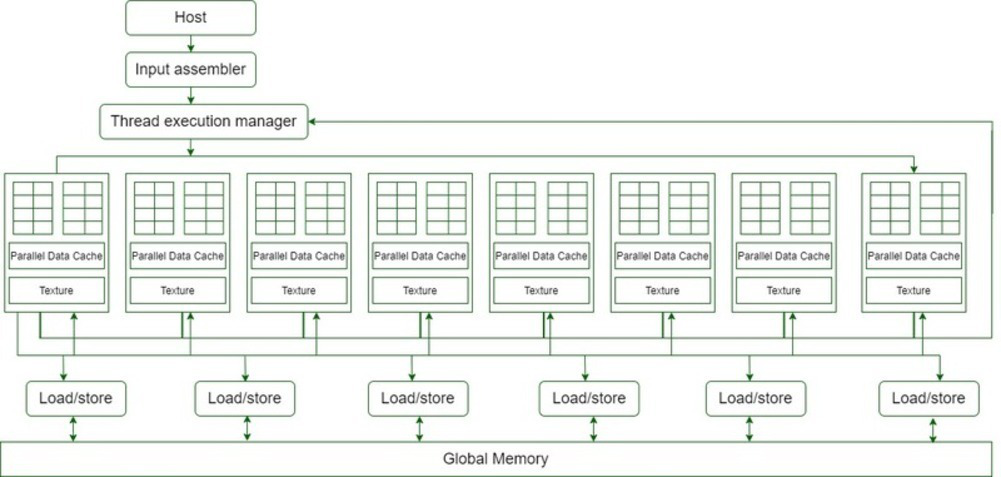

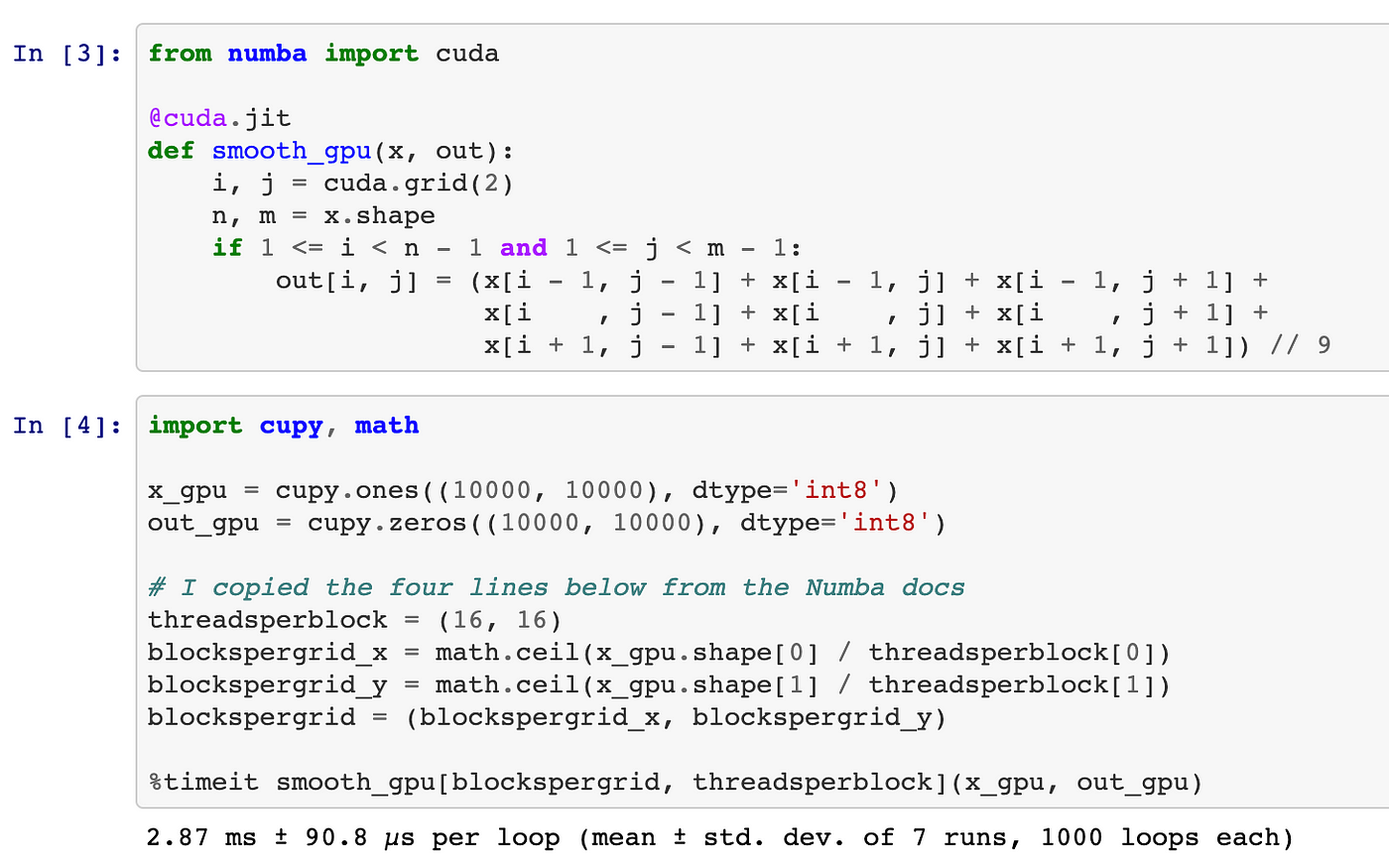

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

Parallelizing across multiple CPU/GPUs to speed up deep learning inference at the edge | AWS Machine Learning Blog