![[PDF] Asynchronous Distributed Neural Network Training using Alternating Direction Method of Multipliers | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/f1ed45db91d225a1744c2f614bdfaf40fd7c0624/3-Figure1-1.png)

[PDF] Asynchronous Distributed Neural Network Training using Alternating Direction Method of Multipliers | Semantic Scholar

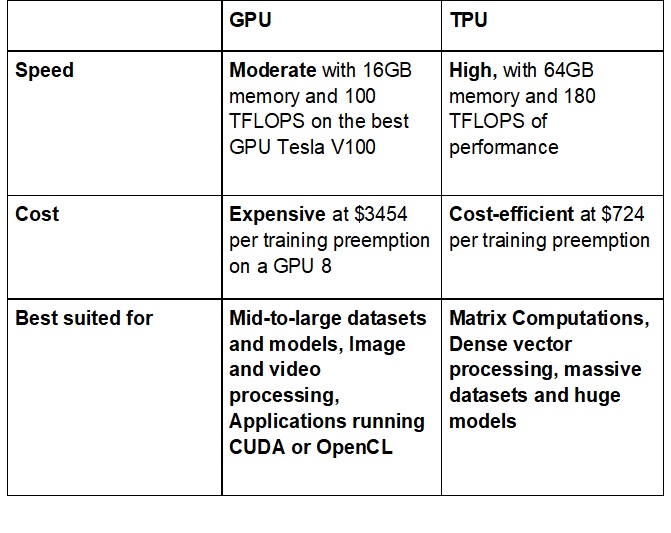

What are the downsides of using TPUs instead of GPUs when performing neural network training or inference? - Data Science Stack Exchange

Researchers at the University of Michigan Develop Zeus: A Machine Learning-Based Framework for Optimizing GPU Energy Consumption of Deep Neural Networks (DNNs) Training - MarkTechPost